Nov 19, 2019 What are Teradata Surrogate Keys and how should they be used? What's the best way to generate them on Teradata? This article will answer all your questions. Surrogate keys may or may not be supplied on update and delete transactions. But in terms of the semantics that have to be carried out, these distinctions don't matter. Regardless of how we design the process of assigning surrogate keys, the important point is that it is not just a matter of requesting a unique key value for an insert transaction.

- Surrogate Keys Generated During Etl Process 2017

- Etl Development Process

- Surrogate Keys Generated During Etl Process System

This article explains how to implement surrogate keys when moving from a logical dimensional model to a physical DBMS. Ralph Kimball and the Kimball Group have given clear-cut guidelines on this matter, but some people still manage to make a mess of it, so it seems worth explaining in finer detail. All the concepts here are taken from Ralph Kimball’s writing; the article only aims to explain them further for readers who may still have some doubts.

A surrogate key has the following characteristics. 1) It is system-generated and unique. Because surrogate keys are system-generated, the generating program is expected never to create and store a duplicate value. The surrogate key value is the result of a program, and any key created by a program follows the same rules for every record. 2) It has no meaning. You cannot tell what a row of data means from its surrogate key value. 3) It is not visible to end users. End users should not see a surrogate key in a report. Surrogate keys can be generated in a variety of ways, and most databases offer ways to generate them.
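As a minimal sketch of one such way, the Python snippet below hands out sequential, meaningless integers and remembers which natural key each one was issued for. The class name `SurrogateKeyGenerator` and the vendor codes are hypothetical, invented for illustration; a real ETL tool or database sequence would normally play this role.

```python
from itertools import count


class SurrogateKeyGenerator:
    """Issues meaningless sequential integers, one per distinct natural key."""

    def __init__(self, start=1):
        self._next_value = count(start)   # endless 1, 2, 3, ... sequence
        self._issued = {}                 # natural key -> surrogate key

    def key_for(self, natural_key):
        # A natural key seen before gets the key it was already given,
        # so the same source entity is never assigned two different keys.
        if natural_key not in self._issued:
            self._issued[natural_key] = next(self._next_value)
        return self._issued[natural_key]


generator = SurrogateKeyGenerator()
vendor_keys = [generator.key_for(code) for code in ["V-ACME", "V-GLOBEX", "V-ACME"]]
print(vendor_keys)  # [1, 2, 1]
```

The integers carry no business meaning, which is the point: nothing about the value `2` says anything about the vendor it identifies.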

-What is a Surrogate Key?

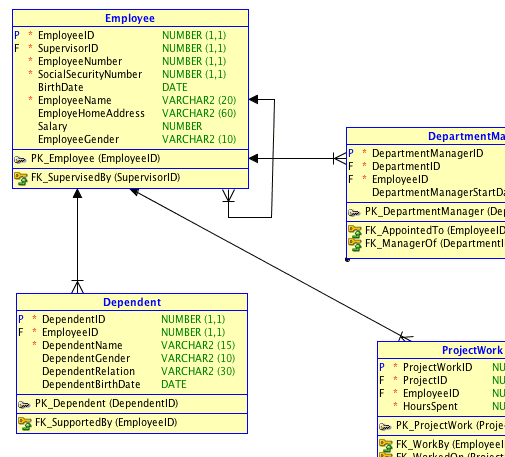

By definition, a surrogate key is a meaningless key assigned in the data warehouse to uniquely identify the rows of a dimension. For example, consider the typical star schema of a logical dimensional model.

Here all the dimensions are connected to the central fact table in a one-to-many relationship. This relationship is identified by a surrogate key, which acts as the primary key of the dimension (i.e. one surrogate key for each dimension, such as Geography, Vendor, Product, etc.) and is referenced as a foreign key in the fact table.

-Surrogate Key in Physical DBMS Layer

While the surrogate key is used to establish a one-to-many relationship between the dimension and fact tables, acting as the primary key in the dimension and as a foreign key in the fact table, it must be kept in mind that this is a logical relationship. It is not meant to be implemented in the database layer using the database’s “Primary Key” feature. As a rule of thumb, we do not implement any keys or constraints in the physical layer; they are not required in data warehousing systems.

In DW systems, and in dimensional models in particular, such constraints are not relevant. They burden the ETL load, cause load failures and at times bring the DW to its knees. As Ralph Kimball points out (and as anyone with common sense can deduce), keys and constraints enforced at the physical level add no value in a DW, because there they are process-oriented objects, unlike in operational systems where they are entity-identifier objects.

So the first point is that we do not “need” to enforce a primary key through the database in an OLAP environment, since it is a controlled environment loaded only through ETL and so is not prone to random updates the way an OLTP environment is. The logic to generate and maintain the surrogate keys is already part of the ETL layer.

Apart from this, enforcing surrogate keys as primary keys at the database layer comes with several serious drawbacks:

– Surrogate keys are maintained using SCD (slowly changing dimension) logic, which determines whether a dimension row is updated in place, added as a new row with a new key, or left unchanged. This depends on whether the column is SCD Type 1, 2 or 3, which in turn is driven by business requirements. In such a scenario, an auto-generated primary key implemented at the database level for a surrogate key can be a serious troublemaker. It can throw the whole SCD logic out of the window and give unexpected results.

– Further, a surrogate key performs the function of a primary key logically in the “given context” of a data mart. If we re-use the same dimension as a shrunken dimension in another data mart where the grain is different, the key will not be unique at that level.

– Apart from this, any database-level operation burdens the ETL load and reduces its performance. More database-level keys mean more I/O, more CPU and less time for the ETL load to complete. This can become a nightmare if your data warehouse holds a sizable volume of data, and it is a common cause of load failures as well.
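To make the first drawback above concrete, here is a minimal sketch of SCD logic living in the ETL layer and deciding when a new surrogate key is issued. Everything in it is an assumption made for illustration: the `VendorRow` fields, the choice of `name` as a Type 1 attribute and `city` as a Type 2 attribute, and the `is_current` flag. Type 3 is left out, and the Type 1 overwrite touches only the current row to keep the example short.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class VendorRow:
    vendor_key: int          # surrogate key, assigned by the ETL
    vendor_code: str         # natural key from the source system
    name: str                # assumed SCD Type 1 attribute (overwrite)
    city: str                # assumed SCD Type 2 attribute (keep history)
    is_current: bool = True


def apply_change(dimension, next_key, vendor_code, name, city):
    """Apply one incoming source record to the in-memory dimension."""
    current = next(
        (row for row in dimension
         if row.vendor_code == vendor_code and row.is_current),
        None,
    )
    if current is None:
        # Brand-new vendor: add a row with a fresh surrogate key.
        dimension.append(VendorRow(next(next_key), vendor_code, name, city))
    elif current.city != city:
        # Type 2 change: expire the old row, add a new version with a new key.
        current.is_current = False
        dimension.append(VendorRow(next(next_key), vendor_code, name, city))
    elif current.name != name:
        # Type 1 change: overwrite in place, the surrogate key stays the same.
        current.name = name
    # Otherwise nothing changed and the existing row is retained as is.


keys = count(1)
dim = []
apply_change(dim, keys, "V-ACME", "Acme", "Pune")        # new vendor
apply_change(dim, keys, "V-ACME", "Acme Ltd", "Pune")    # Type 1 overwrite
apply_change(dim, keys, "V-ACME", "Acme Ltd", "Mumbai")  # Type 2 new version
for row in dim:
    print(row)
```

Only the ETL knows which of the three branches applies, so only the ETL can decide whether a new key is needed. A database that issues a key on every insert cannot make that distinction.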

-Foreign Key Constraints (RI) in DBMS Layer

While surrogate keys “logically” act as foreign keys in the fact table, these foreign key constraints (referential integrity, RI) are also not required to be implemented in the DBMS layer. As Ralph Kimball points out, this also proves to be more of a problem than a benefit.

To explain this further, here are a few more points:

– Data is loaded into the fact table only after it has been verified, cleaned and loaded into the dimension tables. So implementing RI at the fact table level serves no purpose.

– A fact table is a voluminous table with millions of rows, and implementing RI makes it prone to ETL load failures, because FK enforcement incurs a huge cost in CPU and I/O load on the server.

– Some people choose to declare an FK constraint on the fact table but NOT enforce it. This is normally done on DB2 systems, as DB2 is supposed to use this information for its aggregate navigator in terms of MQTs. Refer to the link below for more details.

This aspect is usually misleading, because MQTs are not used automatically. Reports are normally produced through a BI tool, so whichever MQTs we create are used through the BI tool, and the BI tool’s query rewrite handles this well. In fact, in almost all cases an MQT is built for “a” specific report and is therefore queried directly. Further, since MQTs hold their own data once created, querying them is isolated from the source tables. When MQTs are used through the BI tool in this way, the aggregate navigator is not used at all.

Apart from this, the aggregate navigator optimization in DB2 is not known to give good results, and we can always get better results by handling these things explicitly. An MQT on top of this is only known to make matters worse, and in our observation MQT refresh is the single biggest reason for ETL failures.
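Returning to the first point above, that fact rows are loaded only after the dimensions have been verified and loaded, the ETL-side equivalent of an RI check can be sketched as a simple key lookup. The function name `resolve_fact_rows`, the column names and the sample data are hypothetical; the idea is only that a source row whose natural key has no dimension entry is set aside before it ever reaches the fact table.

```python
def resolve_fact_rows(source_rows, vendor_lookup):
    """Swap natural keys for surrogate keys; set aside rows with no match."""
    loadable, rejected = [], []
    for row in source_rows:
        vendor_key = vendor_lookup.get(row["vendor_code"])
        if vendor_key is None:
            # No dimension row exists yet: do not load this as a fact.
            rejected.append(row)
        else:
            loadable.append({"vendor_key": vendor_key, "amount": row["amount"]})
    return loadable, rejected


# Lookup built from the already-loaded dimension: natural key -> surrogate key.
vendor_lookup = {"V-ACME": 1, "V-GLOBEX": 2}
source_rows = [
    {"vendor_code": "V-ACME", "amount": 120.0},
    {"vendor_code": "V-UNKNOWN", "amount": 75.5},
    {"vendor_code": "V-GLOBEX", "amount": 10.0},
]

loadable, rejected = resolve_fact_rows(source_rows, vendor_lookup)
print(loadable)
print(rejected)
```

Because every row in `loadable` received its key from the dimension itself, a database-level FK check on the same rows would only repeat work the ETL has already done.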

-Political Challenge from DBA team

While this approach is correct in theory, the biggest challenge in going forward with it is not technical but political, and comes from the DBA team, as Ralph Kimball also hinted. A DBA team that is not familiar with data warehousing systems can be a nightmare to work with in such cases. A few points can help in explaining to them why implementing keys and constraints at the DBMS level is superfluous:

– Implementing keys and constraints at the physical layer duplicates the effort of the ETL team, which already takes care of this in its code.

– A good ETL means no garbage or random updates reach the data warehouse. Implementing such checks at the physical level is therefore contradictory: it suggests that our ETL jobs are poor.

– Try a demo of the ETL load with keys and constraints and without them, showing why they are performance killers.

– Finally, try telling them that OLAP and OLTP systems are poles apart and that things are totally different in the OLAP world.
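The demo suggested in the list above could start from a small harness like the following sketch. It uses Python's built-in `sqlite3` module with an in-memory database purely as a stand-in; the table names, row counts and the `timed_load` function are assumptions, and timings from SQLite say nothing about how a warehouse-scale DBMS behaves. A convincing demo would run the same comparison on the real platform with realistic volumes.

```python
import sqlite3
import time


def timed_load(with_constraints, n_rows=20000):
    """Load a tiny star schema and return (fact rows loaded, seconds taken)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when on
    if with_constraints:
        conn.execute(
            "CREATE TABLE dim_vendor (vendor_key INTEGER PRIMARY KEY, vendor_code TEXT)")
        conn.execute(
            "CREATE TABLE fact_sales ("
            "vendor_key INTEGER REFERENCES dim_vendor(vendor_key), amount REAL)")
    else:
        conn.execute("CREATE TABLE dim_vendor (vendor_key INTEGER, vendor_code TEXT)")
        conn.execute("CREATE TABLE fact_sales (vendor_key INTEGER, amount REAL)")

    dim_rows = [(key, f"V-{key}") for key in range(1, 101)]
    fact_rows = [(1 + i % 100, float(i)) for i in range(n_rows)]

    start = time.perf_counter()
    conn.executemany("INSERT INTO dim_vendor VALUES (?, ?)", dim_rows)
    conn.executemany("INSERT INTO fact_sales VALUES (?, ?)", fact_rows)
    conn.commit()
    elapsed = time.perf_counter() - start

    loaded = conn.execute("SELECT COUNT(*) FROM fact_sales").fetchone()[0]
    conn.close()
    return loaded, elapsed


for flag in (True, False):
    loaded, elapsed = timed_load(flag)
    print(f"constraints={flag}: {loaded} fact rows in {elapsed:.4f}s")
```

Both runs load identical data, so any difference in elapsed time comes from constraint enforcement alone, which is the comparison to put in front of the DBA team.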

Performing data transformations is a bit complex, as it cannot be achieved by writing a single SQL query and then comparing the output with the target. For ETL Testing Data Transformation, you may have to write multiple SQL queries for each row to verify the transformation rules.

To start with, make sure the source data is sufficient to test all the transformation rules. The key to successful ETL testing of data transformations is to pick correct and sufficient sample data from the source system to apply the transformation rules to.

The key steps for ETL Testing Data Transformation are listed below −

The first step is to create a list of scenarios of input data and the expected results and validate these with the business customer. This is a good approach for requirements gathering during design and could also be used as a part of testing.

The next step is to create the test data that contains all the scenarios. Have an ETL developer automate the process of populating the datasets from the scenario spreadsheet, so that the test data can be regenerated easily, because the scenarios are likely to change.

Next, use data profiling results to compare the range and distribution of values in each field between the target and source data.

Validate the accurate processing of ETL generated fields, e.g., surrogate keys.

Validate that the data types within the warehouse are the same as those specified in the data model or design.

Create data scenarios between tables that test referential integrity.

Validate the parent-to-child relationships in the data.

The final step is to test the lookup transformation. Your lookup query should be straightforward, without any aggregation, and is expected to return only one value per row of the source table. You can join the lookup table directly in the source qualifier, as in the previous test. If this is not the case, write a query joining the lookup table with the main table in the source and compare the data in the corresponding columns in the target.
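Several of the steps above (validating surrogate keys, testing referential integrity and parent-to-child relationships, and checking that a lookup returns one value) come down to short SQL checks. The sketch below runs three such checks with Python's built-in `sqlite3` module against a tiny in-memory example. The tables, columns and sample rows are hypothetical, with one orphan fact row planted on purpose so that a check has something to find.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_vendor (vendor_key INTEGER, vendor_code TEXT, is_current INTEGER);
    CREATE TABLE fact_sales (vendor_key INTEGER, amount REAL);
    INSERT INTO dim_vendor VALUES (1, 'V-ACME', 0), (2, 'V-ACME', 1), (3, 'V-GLOBEX', 1);
    INSERT INTO fact_sales VALUES (1, 10.0), (2, 20.0), (9, 30.0);
""")


def duplicate_surrogate_keys(conn):
    """Surrogate keys that occur on more than one dimension row."""
    return conn.execute(
        "SELECT vendor_key FROM dim_vendor "
        "GROUP BY vendor_key HAVING COUNT(*) > 1 ORDER BY vendor_key"
    ).fetchall()


def orphan_fact_keys(conn):
    """Fact keys with no parent row in the dimension (broken RI)."""
    return conn.execute(
        "SELECT DISTINCT f.vendor_key FROM fact_sales f "
        "LEFT JOIN dim_vendor d ON d.vendor_key = f.vendor_key "
        "WHERE d.vendor_key IS NULL ORDER BY f.vendor_key"
    ).fetchall()


def ambiguous_lookups(conn):
    """Natural keys whose lookup would return more than one current row."""
    return conn.execute(
        "SELECT vendor_code FROM dim_vendor WHERE is_current = 1 "
        "GROUP BY vendor_code HAVING COUNT(*) > 1 ORDER BY vendor_code"
    ).fetchall()


print(duplicate_surrogate_keys(conn))  # [] means every key is unique
print(orphan_fact_keys(conn))          # [(9,)] is the planted orphan
print(ambiguous_lookups(conn))         # [] means each lookup is single-valued
```

Each check returns the offending rows rather than a pass/fail flag, so a failing test shows which keys need investigating.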