- Could Not Generate Key Using Kms Key Alias Credstash Windows 10

- Could Not Generate Key Using Kms Key Alias/credstash

- Could Not Generate Key Using Kms Key Alias Credstash Key

Resource aws_kms_alias provides an alias for a KMS customer master key. The AWS Console enforces a 1-to-1 mapping between aliases and keys, but the API (and hence Terraform) allows you to create as many aliases as the account limits allow.

Aug 28, 2017: You are not required to use the helper snippets if you don't want to, but they can be very helpful in the long run, especially if you later choose to customize the KMS key name or the DynamoDB table, or try to use credstash in another AWS region.

My AWS account is in the us-west-2 region, and the KMS key created in that account has the ARN arn:aws:kms:us-east-1::key/. In my Node module, I am using credstash to decrypt the key, which is encrypted using the KMS key.

One of the most important problems of modern cloud infrastructure is security. You can put a lot of effort into automating the build process of your infrastructure, but it is worthless if you don't handle sensitive data appropriately, and sooner or later that will become a pain.

Most big organisations will probably spend time implementing and supporting HashiCorp Vault, or something similar and more 'enterprisey'.

In most cases, though, something simple yet secure and reliable is sufficient, especially if you follow YAGNI.

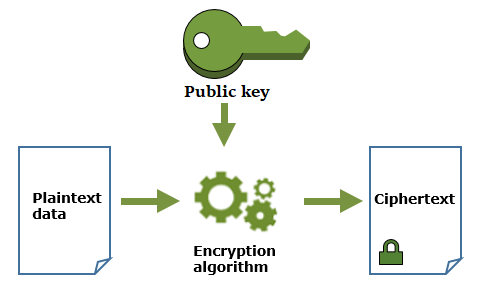

Today I will demonstrate how to use a tool called credstash, which relies on two AWS services: DynamoDB and KMS. It encrypts each secret with a KMS master key and an encryption context and saves the result in DynamoDB, which serves as a key/value store. Anyone with access to the same master key and encryption context can then decrypt and read the secret.
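To make the idea of a master key plus an encryption context more concrete, here is a toy, stdlib-only Python model of envelope encryption, which is how credstash uses KMS. A per-secret data key encrypts the secret locally. Only a wrapped copy of that data key is stored next to the ciphertext, and unwrapping it requires the same context. The names ToyKms, put_secret and get_secret are hypothetical. The SHA-256 keystream is a stand-in for a real cipher (credstash uses AES with a KMS-generated data key), so treat this strictly as an illustration.

```python
import hashlib
import hmac
import json
import os


def _keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || counter) blocks. NOT secure."""
    out = bytearray()
    for counter, offset in enumerate(range(0, len(data), 32)):
        block = hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


def _canonical(context: dict) -> bytes:
    # Sorted keys, so equal contexts always serialise to identical bytes.
    return json.dumps(context, sort_keys=True).encode()


class ToyKms:
    """Hypothetical stand-in for a KMS master key that never leaves this object."""

    def __init__(self) -> None:
        self._master = os.urandom(32)

    def _wrap_key(self, context: dict) -> bytes:
        # The context is mixed into the wrapping key, so only the same context unwraps.
        return hmac.new(self._master, b"wrap" + _canonical(context), hashlib.sha256).digest()

    def _tag(self, wrapped: bytes, context: dict) -> bytes:
        # Authenticates the wrapped key together with the context.
        msg = b"tag" + wrapped + _canonical(context)
        return hmac.new(self._master, msg, hashlib.sha256).digest()

    def generate_data_key(self, context: dict) -> tuple[bytes, bytes]:
        """Return (plaintext data key, wrapped data key followed by its tag)."""
        data_key = os.urandom(32)
        wrapped = _keystream_xor(self._wrap_key(context), data_key)
        return data_key, wrapped + self._tag(wrapped, context)

    def decrypt(self, blob: bytes, context: dict) -> bytes:
        wrapped, tag = blob[:32], blob[32:]
        if not hmac.compare_digest(tag, self._tag(wrapped, context)):
            raise PermissionError("encryption context does not match")
        return _keystream_xor(self._wrap_key(context), wrapped)


def put_secret(kms: ToyKms, table: dict, name: str, secret: str, context: dict) -> None:
    data_key, wrapped_key = kms.generate_data_key(context)
    # Only the wrapped key and the ciphertext are stored, never the plaintext key.
    table[name] = {"key": wrapped_key, "contents": _keystream_xor(data_key, secret.encode())}


def get_secret(kms: ToyKms, table: dict, name: str, context: dict) -> str:
    item = table[name]
    data_key = kms.decrypt(item["key"], context)
    return _keystream_xor(data_key, item["contents"]).decode()


if __name__ == "__main__":
    kms, table = ToyKms(), {}
    put_secret(kms, table, "db_pass_dev", "s3cret", {"role": "dev"})
    print(get_secret(kms, table, "db_pass_dev", {"role": "dev"}))  # s3cret
```

Asking for the same secret with {"role": "qa"} raises PermissionError in this model. Real KMS behaves analogously: decryption with a different context fails.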

From the user's perspective, you don't need to deal with either DynamoDB or KMS. All you do is store and read your secrets, passing the key, value and context as arguments to credstash.

So let's go straight to the Terraform code we will use to provision the DynamoDB table and the KMS key. The code is in my credstash Terraform repo, main.tf:

The code above will create everything credstash needs to function: a DynamoDB table called credential-store and a KMS key with the alias alias/credstash.
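The Terraform itself lives in the repo. As a rough picture of what the table needs to look like, here is the credential-store definition expressed as Python data in the shape of boto3's DynamoDB create_table arguments. It assumes the schema that credstash's own setup command creates: a string hash key name and a string range key version. The throughput numbers are assumptions, and nothing here calls AWS. The small alias check reflects the KMS rule that alias names start with "alias/", with "alias/aws/" reserved for AWS managed keys.

```python
def credstash_table_spec(table_name: str = "credential-store") -> dict:
    """Keyword arguments in the shape boto3's DynamoDB create_table expects."""
    return {
        "TableName": table_name,
        "KeySchema": [
            {"AttributeName": "name", "KeyType": "HASH"},      # secret name
            {"AttributeName": "version", "KeyType": "RANGE"},  # zero-padded version string
        ],
        "AttributeDefinitions": [
            {"AttributeName": "name", "AttributeType": "S"},
            {"AttributeName": "version", "AttributeType": "S"},
        ],
        # Assumed minimal capacity; adjust for real workloads.
        "ProvisionedThroughput": {"ReadCapacityUnits": 1, "WriteCapacityUnits": 1},
    }


def is_custom_kms_alias(alias: str) -> bool:
    """Customer aliases start with 'alias/'; 'alias/aws/' is reserved for AWS managed keys."""
    return (
        alias.startswith("alias/")
        and not alias.startswith("alias/aws/")
        and len(alias) > len("alias/")
    )


if __name__ == "__main__":
    spec = credstash_table_spec()
    # With AWS credentials configured, this dict could be passed to
    # boto3.client("dynamodb", region_name="eu-west-1").create_table(**spec)
    print(spec["TableName"], [k["AttributeName"] for k in spec["KeySchema"]])
    print(is_custom_kms_alias("alias/credstash"))  # True
```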

We will also save the KMS key ID for later use, output.tf:

You can check the result in the console:

Crt and key files represent the two parts of a certificate: the key is the private key, and the crt is the signed certificate. This is only one way to store certificates; another is to keep both inside a single PEM file, or in a p12 container.

Jul 08, 2009: For testing purposes, you can generate a self-signed SSL certificate that is valid for 1 year using the openssl command. You can use this method to generate an Apache SSL key, CSR and CRT file on most Linux and Unix systems, including Ubuntu, Debian, CentOS, Fedora and Red Hat.

Generating CRT and KEY SSL files with Let's Encrypt from scratch: to get a CRT/KEY pair with a manual challenge, you might try something like certbot certonly --manual -d mydomain.com.

Extracting certificate.crt and privateKey.key from a certificate.pfx file: both can be extracted from a Personal Information Exchange file (certificate.pfx) using OpenSSL.

Steps to generate a key and CSR: to configure Tableau Server to use SSL, you must have an SSL certificate. To obtain one, set the OpenSSL configuration environment variable (optional), generate a key file, and create a Certificate Signing Request (CSR).


Now we are ready to use credstash. Although creating the resources is very simple, most of the effort actually goes into setting up the permissions correctly!

So let's create three EC2 instances, one each for admin, dev and a hypothetical qa user. The code is in my credstash_example repo, main.tf:

All instances are pretty much the same: they all run Ubuntu Xenial from the latest Ubuntu AMI, which is looked up in data.tf:

Then we install Python (the language credstash is written in) and credstash via user_data, setup.sh:

They all use the same security group, so we can SSH to the instances and the instances can install software from the web. It could obviously have been stricter and only allowed ports 80/443, but for our ephemeral instances, which will sadly live only a few minutes and then die, it is OK, security-groups.tf:

The only difference is that each instance uses a separate iam_instance_profile, because we need to grant specific permissions for table read/write and for the KMS key and context.

So let's see what we need for that, starting with admin, iam_admin.tf:

Admin has the most permissions. It has two IAM role policies for KMS, kms:GenerateDataKey and kms:Decrypt, so it can both encrypt and decrypt without any contextual restrictions. It also has two IAM role policies for DynamoDB, which together give it access to create, delete and query the table.
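The exact iam_admin.tf is in the repo. The sketch below shows admin's permissions as IAM policy JSON built in Python. The KMS actions come from the description above. The DynamoDB list is an assumption based on what credstash typically needs: CreateTable/DescribeTable for setup, PutItem for put, GetItem/Query/Scan for get and list, and DeleteItem for delete. The function name and the ARNs (including the placeholder account 123456789012) are hypothetical.

```python
import json


def admin_policy(kms_key_arn: str, table_arn: str) -> dict:
    """Unrestricted encrypt/decrypt plus table management (hypothetical ARNs)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # GenerateDataKey is needed to put secrets, Decrypt to read them.
                # No Condition block: any encryption context is accepted.
                "Action": ["kms:GenerateDataKey", "kms:Decrypt"],
                "Resource": kms_key_arn,
            },
            {
                "Effect": "Allow",
                "Action": [
                    "dynamodb:CreateTable",
                    "dynamodb:DescribeTable",
                    "dynamodb:PutItem",
                    "dynamodb:GetItem",
                    "dynamodb:Query",
                    "dynamodb:Scan",
                    "dynamodb:DeleteItem",
                ],
                "Resource": table_arn,
            },
        ],
    }


if __name__ == "__main__":
    policy = admin_policy(
        "arn:aws:kms:eu-west-1:123456789012:key/EXAMPLE",
        "arn:aws:dynamodb:eu-west-1:123456789012:table/credential-store",
    )
    print(json.dumps(policy, indent=2))
```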

Dev, on the other hand, can only query the table and decrypt with the role=dev KMS encryption context, iam_dev.tf:


The only difference in iam_qa.tf is that it can decrypt with the role=qa encryption context instead.
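Dev and qa differ only in the condition on the encryption context, so one function parameterised by role can produce both policies. This sketch uses the real kms:EncryptionContext:<key> condition-key form with the context key role. The function name, the ARNs and the exact DynamoDB actions are assumptions. Scan is included because dev is able to list all secrets later on.

```python
import json


def reader_policy(role: str, kms_key_arn: str, table_arn: str) -> dict:
    """Read-only access: Decrypt only when the request's context has role=<role>."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "kms:Decrypt",
                "Resource": kms_key_arn,
                # KMS compares this against the encryption context sent with Decrypt.
                "Condition": {"StringEquals": {"kms:EncryptionContext:role": role}},
            },
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:Query", "dynamodb:Scan"],
                "Resource": table_arn,
            },
        ],
    }


if __name__ == "__main__":
    key = "arn:aws:kms:eu-west-1:123456789012:key/EXAMPLE"
    table = "arn:aws:dynamodb:eu-west-1:123456789012:table/credential-store"
    for r in ("dev", "qa"):
        print(r, json.dumps(reader_policy(r, key, table)["Statement"][0]["Condition"]))
```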

Let's spin up the instances and see them in action. To SSH to the instances, we will use output.tf to print their IPs:

Once Terraform has been applied in credstash and then in credstash_example, we get the output:

Let’s ssh to admin first:

By default credstash uses us-east-1, so it fails to list the secrets, as we don't have permission to assume the role in this region. We then provide 'eu-west-1', which is the region we used to create the KMS key and the DynamoDB table.

Note that this doesn't stop us from running our instances in a different region: they actually run in 'eu-west-2'.
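What matters is the region the client is configured for, not where the instance runs. Mismatches are easy to spot from an ARN, whose fourth colon-separated field is the region (arn:partition:service:region:account-id:resource). Here is a small Python helper with hypothetical names that flags resources outside the client's configured region. The account ID 123456789012 is a placeholder.

```python
def arn_region(arn: str) -> str:
    """Return the region field of an ARN, e.g. 'eu-west-1'."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    return parts[3]


def region_mismatches(client_region: str, arns: list[str]) -> list[str]:
    """ARNs whose region differs from the region the client is configured for."""
    return [a for a in arns if arn_region(a) != client_region]


if __name__ == "__main__":
    resources = [
        "arn:aws:kms:eu-west-1:123456789012:key/EXAMPLE",
        "arn:aws:dynamodb:eu-west-1:123456789012:table/credential-store",
    ]
    print(region_mismatches("us-east-1", resources))  # both ARNs: wrong region
    print(region_mismatches("eu-west-1", resources))  # []
```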

Let’s create some secrets now:

First we create a secret for the 'role=dev' context. Note that the context key is arbitrarily named 'role'; it could have been anything, like 'env'. The crucial point, though, is to make sure readers have the appropriate access when setting 'kms:EncryptionContext'.


Next we try to update it. This fails because the secret already exists, so we add it again with the '-a' argument, which appends a new record with a new version. Keeping old versions means we can always roll back to a previous one. We then create another secret with the qa context and finally list them.

Now let’s connect to dev box and see what we can do there:

We can get our password 'db_pass_dev' and then list all secrets, but when we try to 'sneak' into qa's 'db_pass_qa' password, it fails, as that secret wasn't created with the 'role=dev' context. We then try the correct context and fail again with an AccessDeniedException error, this time because dev doesn't have permission to use the 'role=qa' context.

Finally, let's see what qa can do. This time I won't open an SSH session but simply run the command remotely:


And I can retrieve the db_pass_qa secret with the 'role=qa' context.

As you can see, installing and using credstash is extremely easy: the only thing required is granting the right IAM permissions to the right users/EC2 instances, and you're done.

If you need a simple way to safely store and use sensitive data in the cloud in production, credstash is worth considering as one of the simplest options.

I hope you enjoyed this blog post. You can find all the code used here at https://github.com/kenych/terraform_exs

In the previous example, we created an EC2 instance that we couldn't access, because we neither provisioned a new key pair nor used an existing one, as the state report shows:

As you can see, key_name is empty.

Now, if you already have a key pair that you use to connect to your instances, which you will find in the EC2 Dashboard under NETWORK & SECURITY > Key Pairs:

then we can specify it in the aws_instance resource so the EC2 instance can be accessed with that key:

Let’s create an instance:

As you can see, key_name is now populated, so our instance is associated with the existing key, meaning we can now use it to connect.

Let's check the public_ip first:

We are ready to connect now. I will run ssh with a command that prints the release info:

If you can't connect and are getting 'Operation timed out':

make sure you can reach port 22 on the other side. A quick tcpdump will show something like this:

As you can see, your ssh client sends a series of SYN (synchronise) requests ([S]) and gets nothing back. A normal sequence would look something like:

with a SYN, SYN-ACK, ACK exchange. If you want to know more about the TCP handshake, read this article, which explains it in detail.
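tcpdump prints TCP flags in brackets: [S] for SYN, [S.] for SYN-ACK, [.] for a bare ACK and [R] or [R.] for a reset. The Python helper below is a hypothetical sketch that classifies a sequence of those flag strings, assuming they have already been pulled out of the tcpdump output. It separates a silently filtered port (only SYNs, the usual security-group symptom) from a closed port (a reset) and a completed handshake.

```python
def handshake_state(flags: list[str]) -> str:
    """Classify TCP flags (as printed by tcpdump, in order) for one connection attempt."""
    saw_syn = saw_synack = False
    for raw in flags:
        flag = raw.strip("[]")
        if flag.startswith("R"):
            return "reset"         # RST: typically the port is reachable but closed
        if flag == "S":
            saw_syn = True
        elif flag == "S." and saw_syn:
            saw_synack = True
        elif flag == "." and saw_synack:
            return "established"   # SYN, SYN-ACK, ACK completed
    if saw_synack:
        return "half-open"         # SYN-ACK seen, final ACK not yet
    if saw_syn:
        return "no reply"          # only SYNs: typical of a filtered port
    return "no handshake"


if __name__ == "__main__":
    print(handshake_state(["[S]", "[S]", "[S]"]))   # no reply
    print(handshake_state(["[S]", "[S.]", "[.]"]))  # established
```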

So as you can see:

it is using the 'default' security group. Now go to the VPC dashboard, Security Groups:

and make sure an inbound rule allows SSH (port 22) from your IP address, or from everywhere (0.0.0.0/0).
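Whether a rule covers you comes down to port-range and CIDR containment. This sketch uses Python's standard ipaddress module. The (protocol, from_port, to_port, cidr) tuples are a hypothetical simplification of security group ingress rules, which in reality also cover IPv6 ranges, prefix lists and references to other groups. The "-1" protocol value stands for all protocols, as it does in AWS. The addresses are documentation ranges, not real hosts.

```python
import ipaddress


def ssh_allowed(client_ip: str, rules: list[tuple[str, int, int, str]]) -> bool:
    """True if any (protocol, from_port, to_port, cidr) rule admits TCP port 22 from client_ip."""
    addr = ipaddress.ip_address(client_ip)
    for protocol, from_port, to_port, cidr in rules:
        if protocol not in ("tcp", "-1"):  # "-1" means all protocols (and ports)
            continue
        if protocol == "tcp" and not (from_port <= 22 <= to_port):
            continue
        if addr in ipaddress.ip_network(cidr):
            return True
    return False


if __name__ == "__main__":
    rules = [("tcp", 80, 443, "0.0.0.0/0"), ("tcp", 22, 22, "198.51.100.0/24")]
    print(ssh_allowed("203.0.113.10", rules))  # False: port 22 only open to another range
```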

Provisioning a new key pair.

Now, let's say you don't have any keys, or you want to provision a new key just for this EC2 instance.

Let’s destroy our instance first:

and then provision it again with a new key. For this, you will need to generate a key first:

We now have two files:

We will need to provision the public key and keep the private key safe and hidden:

As you can see, we added key_name to the aws_instance resource and defined public_key inside the aws_key_pair resource. Alternatively, you could refer to the file (for example with Terraform's file() function) instead of pasting its contents. This is actually preferable, as there is less chance of a copy-paste mistake.

Let's connect and check that the key has been added: